Exploring the Claude Agent SDK

Learning in public: experimenting with Anthropic's Agent SDK after hearing that anything Claude Code can do, you can do with the SDK.

Learning in public: experimenting with Anthropic's Agent SDK after hearing that anything Claude Code can do, you can do with the SDK.

This is my recap of Hamel and Shreya's LLM evaluation course. I'm hoping I come back here in the future every time I need to remind myself of how to do this the right way.

Notes from the final lesson of Hamel and Shreya's LLM evaluation course - practical strategies for improving accuracy and reducing costs through prompt refinement, architecture changes, fine-tuning, and model cascades.

Notes from lesson 7 of Hamel and Shreya's LLM evaluation course - interface design principles and strategic sampling.

How to set up a persistent Docker environment for AI coding tools without losing your authentication every time you restart the container.

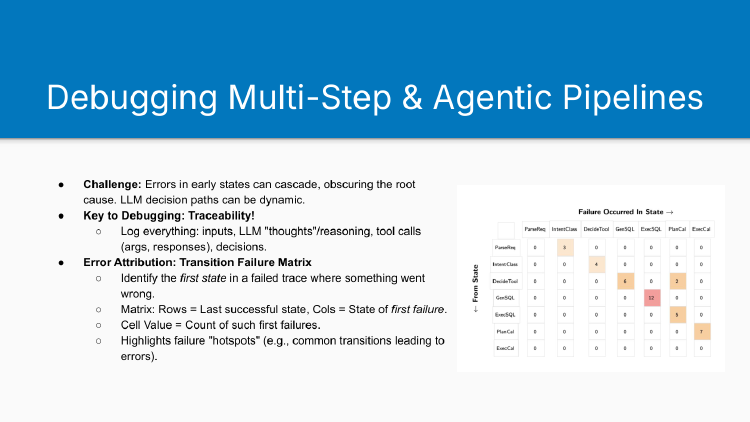

Notes from lesson 6 of Hamel and Shreya's LLM evaluation course - debugging agentic systems, handling complex data modalities, and implementing CI/CD for production LLM applications.