Claude Agent SDK Part 5: Editing Files with Checkpointing

Letting the agent create posts that follow my templates, with a way to recover from mistakes.

Building context profiles and usage tracking that work with the SDK's design.

Discovering the trade-offs between agency and control when building on the Claude Agent SDK.

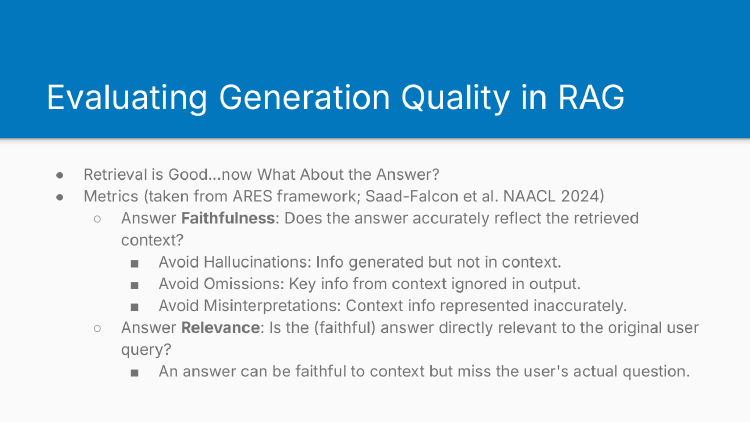

Notes from lesson 5 of Hamel and Shreya's LLM evaluation course - evaluating retrieval quality, generation quality, and common pitfalls in RAG systems.

Swyx argues for 2025-2035 as the decade of AI agents, backed by unprecedented infrastructure investment and converging technical definitions.

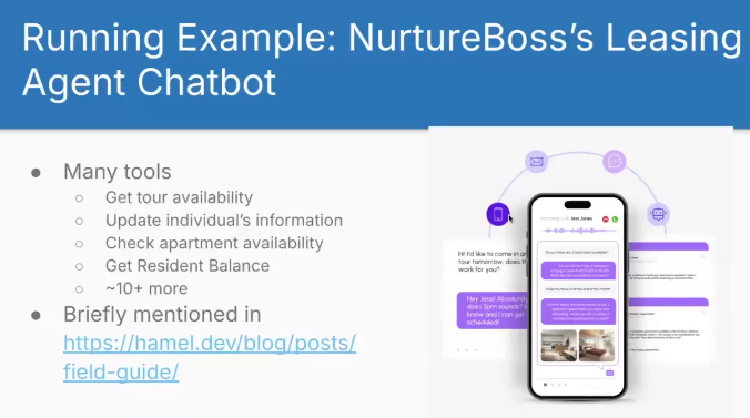

Notes from lesson 4 of Hamel and Shreya's LLM evaluation course - handling multi-turn conversations and building evaluation criteria through collaboration.

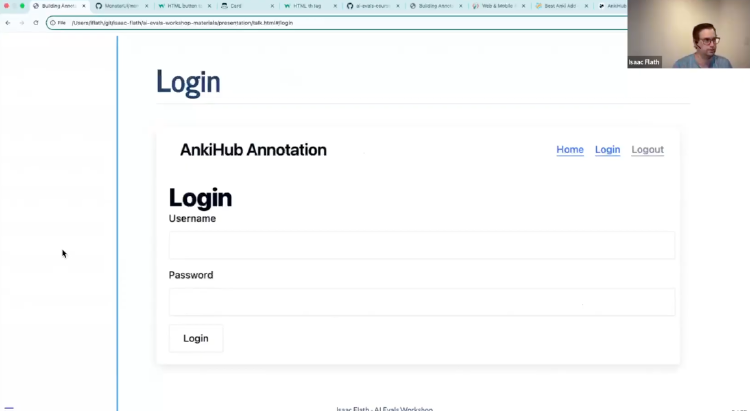

How Isaac Flath built a medical flashcard annotation tool for AnkiHub using FastHTML, and why custom annotation tools beat generic ones for complex domains.

Deployment options for FastHTML applications.

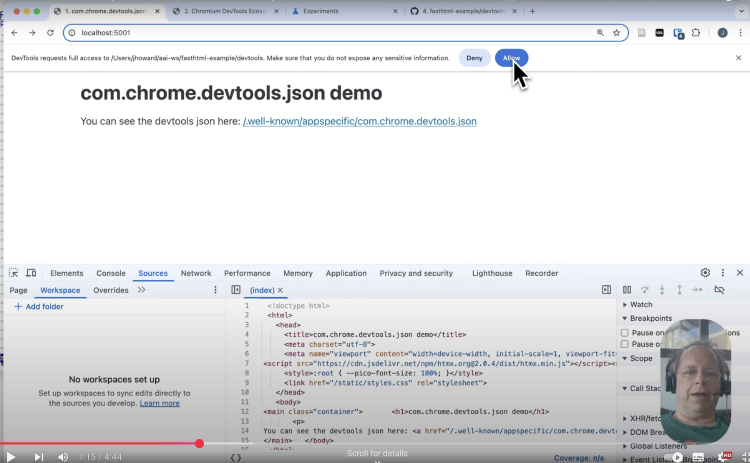

How to connect Chrome DevTools to your FastHTML applications for fast CSS and HTML debugging and iteration during development.