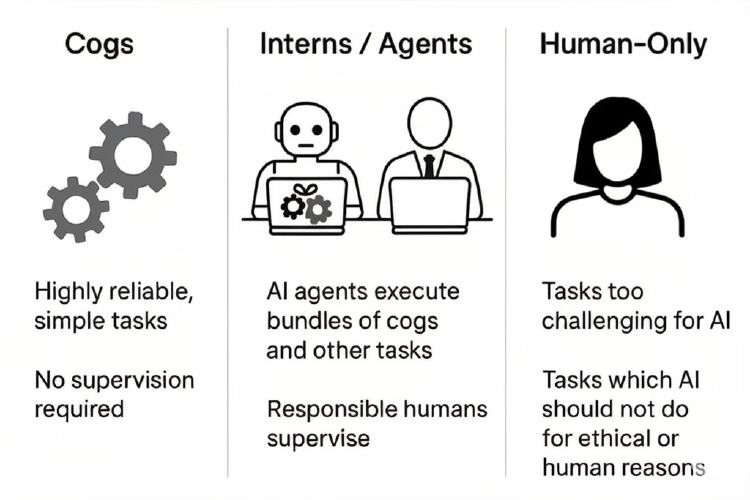

Cogs / Interns / Human Tasks, a practical framework for AI transformation

Trying to blend two AI frameworks into one that's more practically useful

- David Gérouville-Farrell

- 2 min read

A while back, Drew Breunig’s classification of AI capabilities as “Cogs, Interns, and Gods” went viral on Twitter. I like it. It’s punchy. It’s powerful. I use it all the time, but I really dislike the Gods framing - even Breunig acknowledges these (superintelligent AI agents) don’t exist yet, so it’s really redundant.

You basically can’t do anything with it.

I also like Ethan Mollick’s framework of “Automated”, “Delegated”, and “Just Me” as a way to talk about how we work with AI. But “Just Me” feels clumsy in professional contexts, and “Delegated” just doesn’t capture the dynamic back-and-forth relationship between humans and AI systems.

I’m using this blended framework now and I’m finding it really helpful. People understand it at face value and it gives us nice buckets to put things into in order to plan a real practical transformation project.

- Cogs

- Well-defined tasks that AI performs exceptionally well and can be trusted to do without supervision. Could be a simple ML-type AI. Could be a full-on agent. Key thing is - very, very reliable, basically never fails. Full automation.

- Breunig and Mollick have similar definitions. Breunig’s word is better, so I use Cogs.

- AI Interns

- Bundles of cogs and / or other more complicated tasks that involve AI or are executed by AI agents - supervised by responsible humans.

- Think co-drafting a memo with ChatGPT. Think Deep Research doing a bunch of basic work for you, that you then take as a starting point for your own outputs.

- Borrowing here from both frameworks - “Intern” communicates perfectly the relationship between human and AI, but Ethan’s “bundles of tasks” concept makes it clearer what exactly is happening here.

- Human-Only

- Leaning more on Mollick’s framework here (“Just me” - but with better wording), there are two types of human-only tasks:

- Tasks which AI just can’t do very well, even with supervision. In practice, over time, these will move towards the intern level.

- Tasks which, for ethical or fundamentally human reasons, we don’t want AI to do.

This is working really well for me. I wrote a longer piece explaining how I got here, if anyone wants to read it. You can find it here.