LLM Evals Course Lesson 6: Complex Pipelines and CI/CD

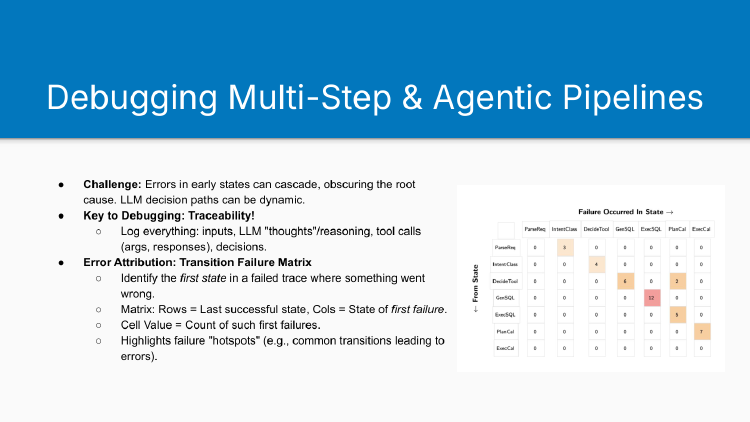

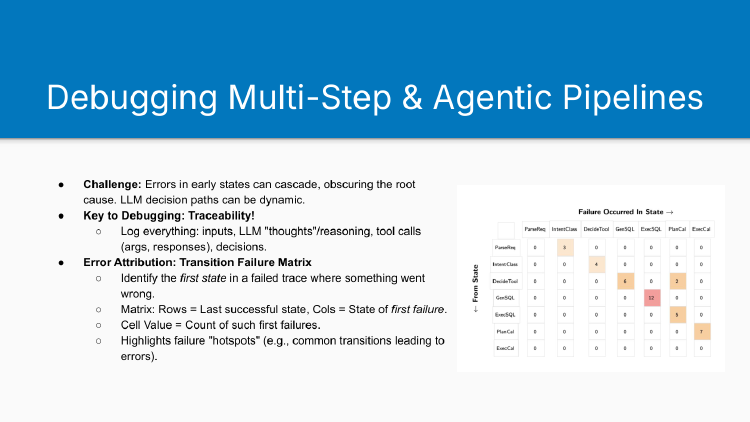

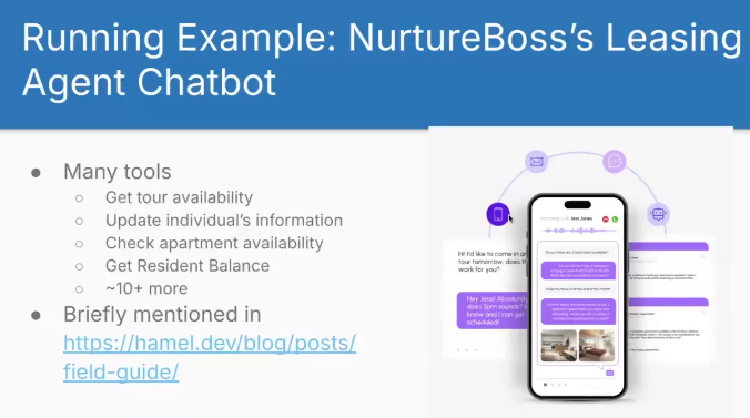

Notes from lesson 6 of Hamel and Shreya's LLM evaluation course - debugging agentic systems, handling complex data modalities, and implementing CI/CD for production LLM applications.

Notes from lesson 6 of Hamel and Shreya's LLM evaluation course - debugging agentic systems, handling complex data modalities, and implementing CI/CD for production LLM applications.

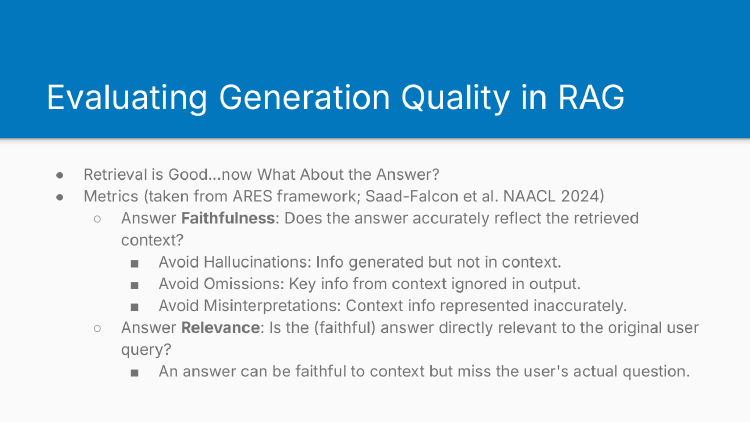

Notes from lesson 5 of Hamel and Shreya's LLM evaluation course - evaluating retrieval quality, generation quality, and common pitfalls in RAG systems.

Swyx argues for 2025-2035 as the decade of AI agents, backed by unprecedented infrastructure investment and converging technical definitions.

Notes from lesson 4 of Hamel and Shreya's LLM evaluation course - handling multi-turn conversations and building evaluation criteria through collaboration.

How Isaac Flath built a medical flashcard annotation tool for AnkiHub using FastHTML, and why custom annotation tools beat generic ones for complex domains.

Deployment options for FastHTML applications.